The coding results of a reliability test cannot replace formal coding. Reliability test coding must be completed before formal coding begins.

Research methodology requires a reliability test among coders before formal coding. In both traditional content analysis and big data content analysis, two or more coders must work independently to judge the characteristics of a piece of information or content and reach a consistent conclusion. The degree of this consistency, once quantified, is referred to as inter-coder reliability.

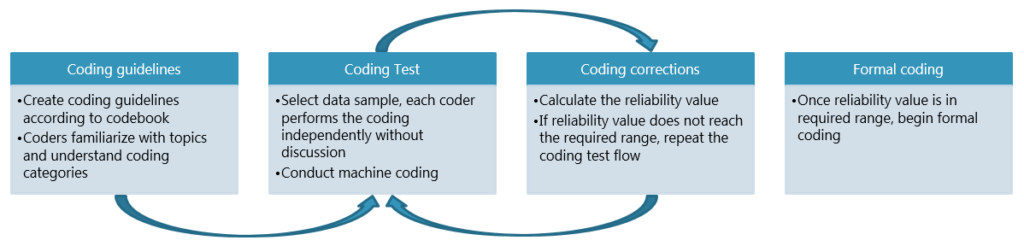

How to conduct a reliability test?

The reliability value can be calculated either manually (using formulas) or with computer programs. Taking inter-coder reliability assessment in big data content analysis as an example, the specific operational steps are as follows:

- Create coding guidelines based on the codebook. The guidelines should be clear and consistent, so that all coders become familiar with the topic and share the same understanding of what each coding category means.

- Perform a coding test. Select a batch of data as the test sample. During the test, each coder should code independently, without discussing the material with or guiding the other coders. If machine coding is used, the machine coding reliability test can be run directly (on DiVoMiner, this can be done with a single click).

- Conduct coding corrections. If the reliability value does not reach the required range, the coding test must be repeated. Before retesting, coders should be trained again, with particular attention to the categories where their coding results differed most. If machine coding is involved, the keyword options in the codebook should be rechecked and revised, refining the category options as much as possible, and the machine coding reliability test should then be run again.

- Begin formal coding. Once all coders achieve the desired reliability, formal coding can begin.
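
The check at the heart of the steps above can be sketched in a few lines of Python. This is a minimal illustration, not the calculation DiVoMiner performs: it assumes two coders, a single nominal variable, and Cohen's kappa as the reliability indicator. The sample codes and the `REQUIRED_RELIABILITY` threshold of 0.8 are hypothetical values chosen for the example; the appropriate indicator and threshold depend on the study.

```python
from collections import Counter

# Hypothetical threshold for illustration only; set it according to your study design.
REQUIRED_RELIABILITY = 0.8


def percent_agreement(codes_a, codes_b):
    """Share of test items on which the two coders chose the same category."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must code the same non-empty test sample.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Agreement between two coders, corrected for agreement expected by chance."""
    n = len(codes_a)
    observed = percent_agreement(codes_a, codes_b)
    counts_a, counts_b = Counter(codes_a), Counter(codes_b)
    categories = counts_a.keys() | counts_b.keys()
    # Chance agreement: product of each coder's marginal proportions per category.
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical category for every item
    return (observed - expected) / (1 - expected)


# Made-up codes from two coders who independently coded the same 10 test items.
coder_a = ["pos", "pos", "neg", "neu", "pos", "neg", "neg", "neu", "pos", "neg"]
coder_b = ["pos", "neg", "neg", "neu", "pos", "neg", "pos", "neu", "pos", "neg"]

agreement = percent_agreement(coder_a, coder_b)
kappa = cohens_kappa(coder_a, coder_b)
ready_for_formal_coding = kappa >= REQUIRED_RELIABILITY

print(f"Percent agreement: {agreement:.2f}")
print(f"Cohen's kappa:     {kappa:.4f}")
print("Proceed to formal coding" if ready_for_formal_coding else "Retrain coders and retest")
```

In this made-up sample the coders agree on 8 of 10 items, yet kappa falls below the assumed threshold once chance agreement is removed, so the sketch would send the coders back to the correction step.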

A comprehensive reliability report should include the following information:

- The number of samples used for the reliability analysis and the reason for conducting it.

- The relationship between the reliability samples and the whole database: whether they are part of the actual data or additional samples.

- Coders’ information: the number of coders (must be 2 or more), their backgrounds, and whether the researchers themselves are coders.

- The quantity of coding tasks assigned to each coder.

- The reliability indicators selected and the justification for choosing them.

- Inter-coder reliability for each variable.

- Coders’ training time.

- The approach used to handle disagreements during the coding process.

- Where readers can obtain detailed coding guidelines, procedures, and codebooks.

- The reliability level of each variable, rather than only the overall reliability across all variables.
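
The last point can be illustrated with a short Python sketch showing how an overall figure can hide a weak variable. It is an assumption-laden example, not a prescribed reporting format: the variable names (`tone`, `topic`, `frame`), the codes, and the use of simple percent agreement as the indicator are all hypothetical.

```python
def percent_agreement(codes_a, codes_b):
    """Share of items on which the two coders chose the same category."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must code the same non-empty sample.")
    return sum(a == b for a, b in zip(codes_a, codes_b)) / len(codes_a)


# Made-up reliability sample: for each variable, (coder A's codes, coder B's codes).
reliability_sample = {
    "tone": (["pos", "neg", "neu", "pos", "neg"],
             ["pos", "neg", "neu", "pos", "neg"]),
    "topic": (["econ", "health", "econ", "sport", "health"],
              ["econ", "health", "econ", "sport", "health"]),
    "frame": (["conflict", "human", "conflict", "moral", "human"],
              ["conflict", "moral", "human", "moral", "conflict"]),
}

# Reliability reported separately for each variable.
per_variable = {
    variable: percent_agreement(codes_a, codes_b)
    for variable, (codes_a, codes_b) in reliability_sample.items()
}

# Overall reliability pooled across every coding decision.
all_a = [code for codes_a, _ in reliability_sample.values() for code in codes_a]
all_b = [code for _, codes_b in reliability_sample.values() for code in codes_b]
overall = percent_agreement(all_a, all_b)

for variable, value in per_variable.items():
    print(f"{variable:<6} {value:.2f}")
print(f"{'all':<6} {overall:.2f}")
```

Here the pooled agreement looks acceptable, while the hypothetical `frame` variable on its own shows that the coders agreed on fewer than half of the items, which a report limited to the overall value would not reveal.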