Inter-coder reliability refers to the extent to which a study's coding procedures yield the same results when the same text is coded by different people (Lombard, Snyder-Duch, & Bracken, 2004).

In a reliability test, all coders involved in the study code the same data, and inter-coder reliability coefficients are then calculated from their results. If the coefficients reach an acceptable level, formal coding can begin. If they do not meet the study's requirements, correct the coding problems and repeat the test coding until inter-coder reliability reaches an acceptable level.
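To make the calculation step concrete, here is a minimal Python sketch of two common coefficients for a pair of coders: simple percent agreement, and Cohen's kappa, which corrects agreement for what would be expected by chance. It is an illustration under stated assumptions, not DiVoMiner's implementation: the category labels and coded data are invented, and the 0.80 cutoff is only an assumed example of an "acceptable level" (a commonly cited benchmark). Use the threshold your study requires.

```python
from collections import Counter

def percent_agreement(a, b):
    """Share of units to which two coders assigned the same category."""
    if len(a) != len(b) or not a:
        raise ValueError("both coders must code the same, non-empty set of units")
    return sum(x == y for x, y in zip(a, b)) / len(a)

def cohens_kappa(a, b):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    p_o = percent_agreement(a, b)  # also validates the inputs
    n = len(a)
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement if each coder assigned categories independently at their own rates
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if p_e == 1:
        return 1.0  # both coders used one identical category; kappa is undefined, treated as 1 here
    return (p_o - p_e) / (1 - p_e)

THRESHOLD = 0.80  # assumption: example benchmark only; set to your study's requirement

# Hypothetical test-coding results for one question, two coders, eight units
coder_1 = ["pos", "neg", "neu", "pos", "pos", "neg", "neu", "pos"]
coder_2 = ["pos", "neg", "pos", "pos", "pos", "neg", "neu", "neg"]

kappa = cohens_kappa(coder_1, coder_2)
print(f"agreement={percent_agreement(coder_1, coder_2):.2f} kappa={kappa:.2f}")
print("start formal coding" if kappa >= THRESHOLD else "retrain coders and re-test")
```

With these made-up codes the coders agree on 6 of 8 units (0.75), but kappa is only 0.60 because part of that agreement is expected by chance, so the sketch reports that another round of testing is needed.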

To conduct a reliability test on DiVoMiner, first move a sample of data from the [Coding Library] to the [Test Library]. All coders then complete the same coding task on the [Coding Test] page, after which the administrator calculates the reliability coefficients on the [Reliability Calculation] page. During or after this process, the [Coding Tracking] page shows every coder's coding results so you can check how consistent they are. The specific steps are as follows:

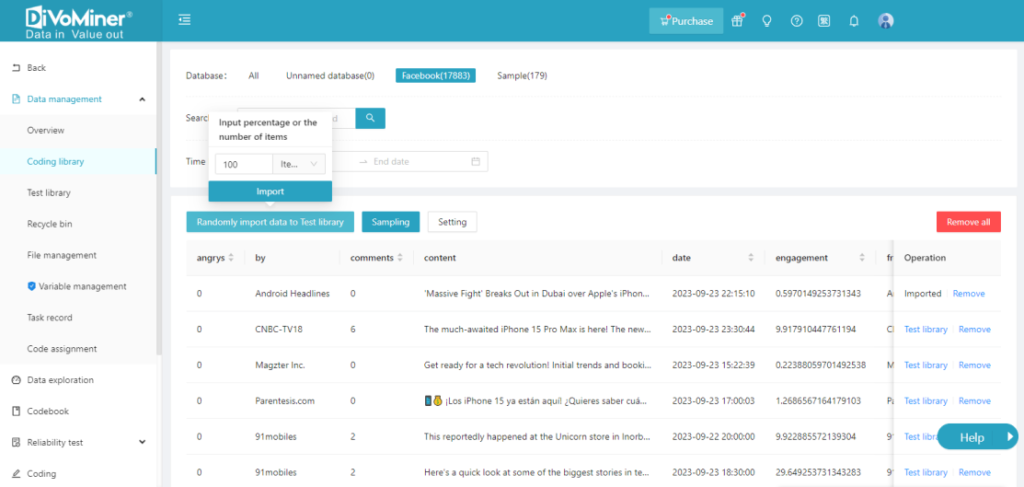

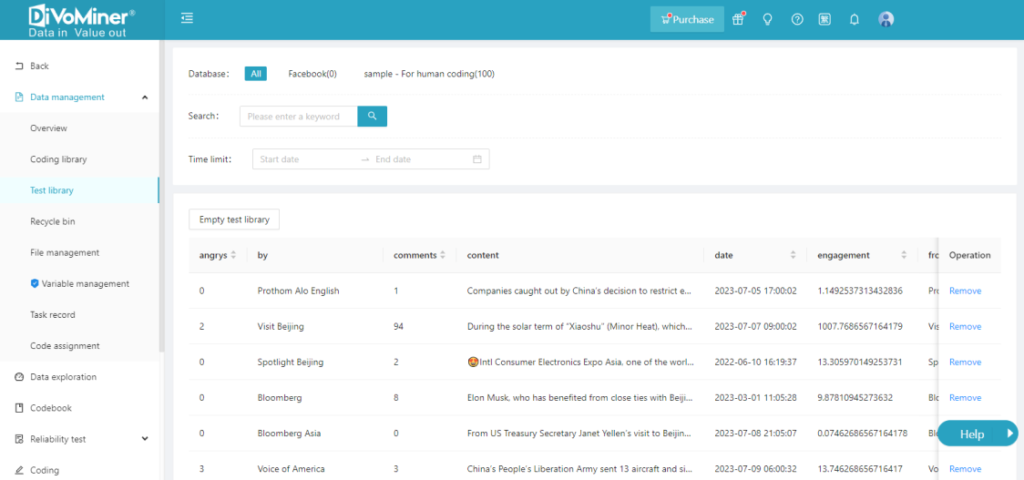

Step 1: Create a [Test Library]. Click [Coding Library], then click [Randomly Import Data to Test Library]. Alternatively, to import a single data item individually, click [Test Library] on the right side of that item. Go to the [Test Library] to view the imported data.

If the [Randomly Import Data to Test Library] button is grey, select a specific database instead of [All] so that sampling can proceed normally.

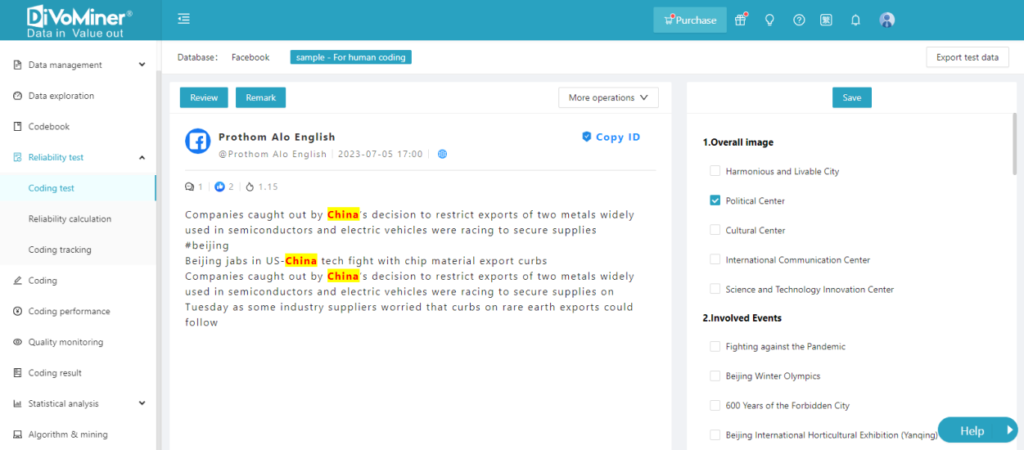
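Random import can be thought of as simple random sampling without replacement from the selected database. The Python sketch below shows that idea; DiVoMiner's actual sampling method is not described in this article, so treat it as an assumption. The document IDs, the sample size of 20, and the `sample_test_library` helper are all hypothetical.

```python
import random

def sample_test_library(coding_library, k, seed=None):
    """Draw k distinct units at random (without replacement) for a test library."""
    if k > len(coding_library):
        raise ValueError("sample size exceeds the number of units in the library")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(coding_library, k)

# Hypothetical library: 200 document IDs from one selected database
library = [f"doc-{i:03d}" for i in range(1, 201)]
test_library = sample_test_library(library, k=20, seed=42)
print(len(test_library), len(set(test_library)))
```

Passing a seed is a design choice for reproducibility: the same seed always yields the same test sample, which helps if you need to report how the sample was drawn.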

Step 2: Complete the coding test. All coders code their assigned tasks in the [Reliability Test] section.

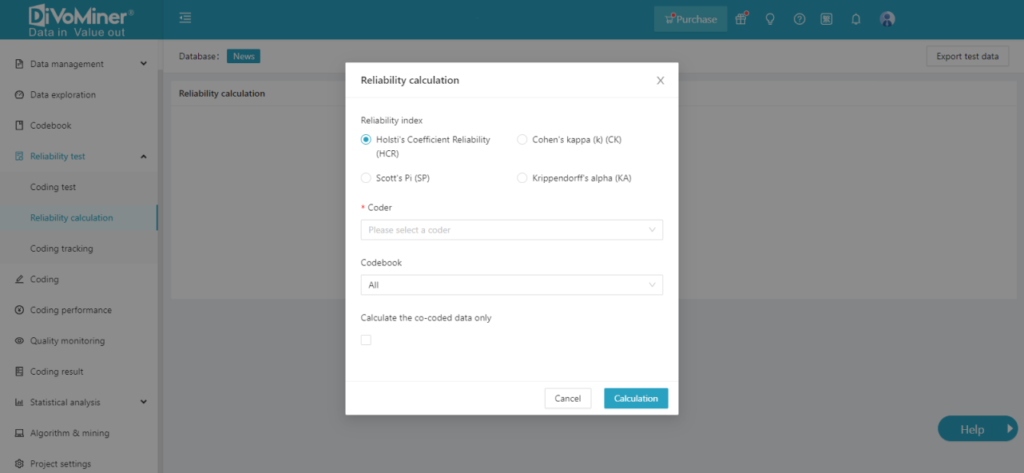

Step 3: After all coders have completed the coding test, go to [Reliability Calculation], select the coders and the reliability index, and click [Calculation] to get the reliability results.

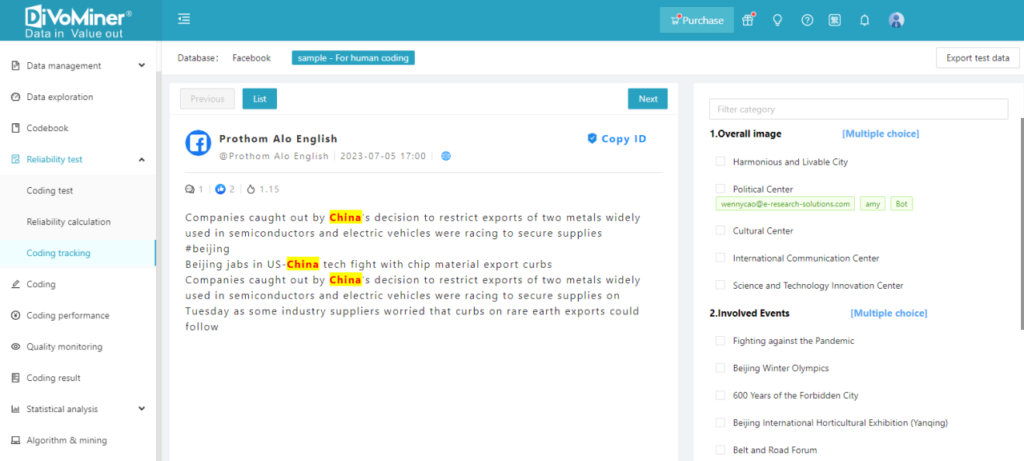
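This article does not list which reliability indices DiVoMiner offers or how it computes them. As a generic illustration of one widely used index that handles more than two coders and missing codes, the sketch below computes Krippendorff's alpha for nominal (categorical) data from a coincidence matrix. The data layout (one list per unit, one value per coder, `None` where a coder did not code the unit) and the function name are hypothetical, and the result is not guaranteed to match DiVoMiner's output.

```python
from collections import defaultdict
from itertools import permutations

def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data.

    units: one list per coded unit, holding one value per coder (None = not coded).
    """
    # Coincidence matrix: each ordered pair of values within a unit adds 1 / (m_u - 1)
    o = defaultdict(float)
    for values in units:
        coded = [v for v in values if v is not None]
        m = len(coded)
        if m < 2:
            continue  # a unit coded by fewer than two coders has no pairable values
        for c, k in permutations(coded, 2):
            o[(c, k)] += 1 / (m - 1)

    # Marginal totals n_c and the total number of pairable values n
    n_c = defaultdict(float)
    for (c, _k), weight in o.items():
        n_c[c] += weight
    n = sum(n_c.values())
    if n == 0:
        raise ValueError("no unit was coded by at least two coders")

    # alpha = 1 - D_o / D_e, where any mismatch between categories counts as distance 1
    observed = sum(w for (c, k), w in o.items() if c != k)
    expected = sum(n_c[c] * n_c[k] for c in n_c for k in n_c if c != k) / (n - 1)
    if expected == 0:
        return 1.0  # only one category was used; alpha is undefined, treated as 1 here
    return 1 - observed / expected

# Hypothetical test: 5 units coded by 3 coders; the third coder skipped unit 4
units = [
    [1, 1, 1],
    [2, 2, 2],
    [1, 2, 1],
    [2, 2, None],
    [1, 1, 1],
]
print(round(krippendorff_alpha_nominal(units), 3))  # 0.729
```

The single disagreement on unit 3 pulls alpha down to about 0.73 even though most individual codes match, because alpha compares observed disagreement with the disagreement expected from how often each category is used overall.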

Step 4: Check each coder's test coding results in [Coding Tracking] to see where their coding differs.
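Conceptually, a discrepancy check compares every coder's code for each unit and lists the units where the codes differ. The hypothetical `find_discrepancies` helper below sketches that comparison for a single question. The data layout and coder names are assumptions, not DiVoMiner's export format. A unit that one coder left uncoded (`None`) is also reported, since it cannot count as agreement.

```python
def find_discrepancies(codes_by_coder):
    """Return {unit_id: {coder: code}} for every unit on which coders disagree.

    codes_by_coder maps each coder's name to a {unit_id: code} dict.
    A unit missing from a coder's dict is treated as None (uncoded).
    """
    all_units = set().union(*(coder_codes.keys() for coder_codes in codes_by_coder.values()))
    report = {}
    for unit in sorted(all_units):
        by_coder = {coder: coder_codes.get(unit) for coder, coder_codes in codes_by_coder.items()}
        if len(set(by_coder.values())) > 1:  # more than one distinct code means disagreement
            report[unit] = by_coder
    return report

# Hypothetical test-coding results for one question (e.g., sentiment)
tracking = {
    "coder_a": {"doc-001": "pos", "doc-002": "neg", "doc-003": "neu", "doc-004": "pos"},
    "coder_b": {"doc-001": "pos", "doc-002": "pos", "doc-003": "neu"},
}
for unit, codes in find_discrepancies(tracking).items():
    print(unit, codes)
```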

Step 5: If inter-coder reliability reaches an acceptable level, formal coding can begin. Otherwise, repeat the coding test, over multiple rounds if necessary, until the reliability coefficient reaches an acceptable level.

For questions with low reliability coefficients, retrain the coders, then run the reliability test again.
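Finding the questions that need retraining means computing a coefficient per question rather than one overall figure. The sketch below uses average pairwise percent agreement for simplicity (a chance-corrected index such as kappa or alpha could be swapped in) and flags questions below an assumed 0.80 threshold. The question names, codes, and helper functions are hypothetical.

```python
from itertools import combinations

def pairwise_agreement(codes):
    """Average percent agreement over every pair of coders.

    codes: one list of codes per coder, all covering the same units in the same order.
    """
    if len(codes) < 2:
        raise ValueError("need at least two coders")
    n_units = len(codes[0])
    if n_units == 0 or any(len(c) != n_units for c in codes):
        raise ValueError("every coder must code the same, non-empty set of units")
    per_pair = [sum(x == y for x, y in zip(a, b)) / n_units for a, b in combinations(codes, 2)]
    return sum(per_pair) / len(per_pair)

def questions_needing_retraining(results, threshold=0.80):
    """Return {question: score} for questions whose agreement falls below threshold.

    threshold=0.80 is an assumed benchmark; set it to your study's requirement.
    """
    scores = {q: pairwise_agreement(codes) for q, codes in results.items()}
    return {q: round(s, 2) for q, s in scores.items() if s < threshold}

# Hypothetical results: three coders, four units, two questions
results = {
    "Q1 topic": [
        ["eco", "pol", "eco", "soc"],
        ["eco", "pol", "eco", "soc"],
        ["eco", "pol", "eco", "soc"],
    ],
    "Q2 sentiment": [
        ["pos", "neg", "neu", "pos"],
        ["pos", "pos", "neu", "neg"],
        ["neg", "neg", "neu", "pos"],
    ],
}
print(questions_needing_retraining(results))  # {'Q2 sentiment': 0.5}
```

Q1 scores 1.0 and passes. Q2 averages 0.5 across its three coder pairs (0.50, 0.75, and 0.25), so in this example the coders would be retrained on that question before the next test round.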

To rebuild the [Test Library], first click [Empty Test Library] in the [Test Library]. This clears all coding results in the test library. Next, rebuild the test library from the [Coding Library] and have each coder independently re-code the data in the new test library.


Lombard, M., Snyder-Duch, J., & Bracken, C. C. (2004). A call for standardization in content analysis reliability. Human Communication Research, 30(3), 434-437.