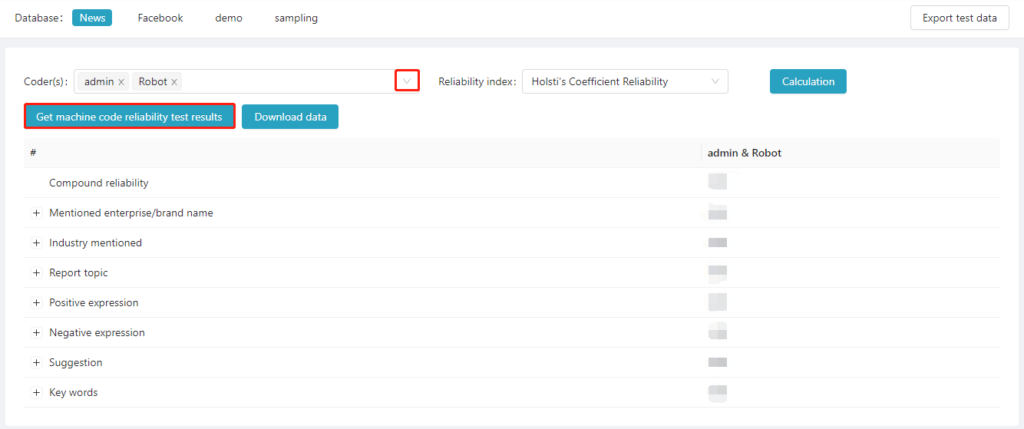

After pre-coding, click “Reliability calculation” to check inter-coder reliability, both overall and for specific categories. The reliability coefficient shows whether coders applying the same coding scheme have reached an acceptable level of agreement. If the desired reliability level is not reached, retrain and re-instruct the coders, then repeat the test until it is. Only then should you start formal coding. In the reliability test results, Composite Reliability represents the overall reliability across all categories. Click “+” to view the reliability results for the specific options under each category.

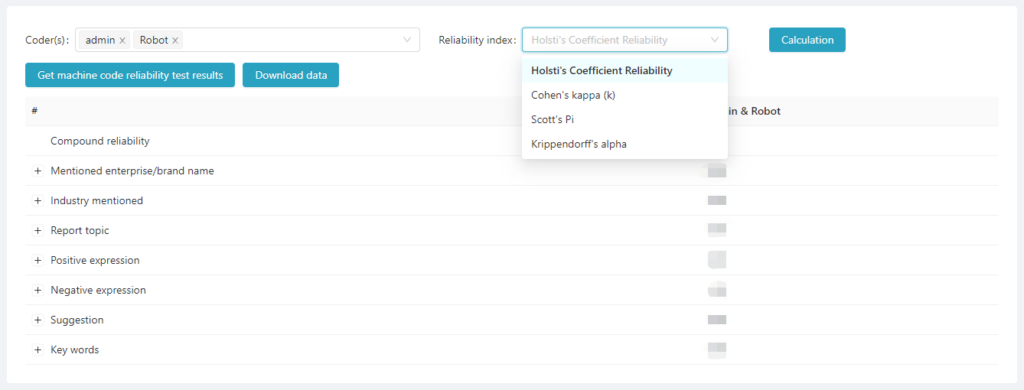

The platform currently provides four commonly used reliability coefficients: Holsti’s coefficient, Cohen’s kappa (κ), Scott’s pi (π), and Krippendorff’s alpha (α). Reporting these established coefficients makes the reliability test easier to justify and compare with other studies. Because the platform reports inter-coder reliability for each category question, you can quickly identify which categories need coding correction and closer supervision.
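To make the four coefficients more concrete, here is a minimal sketch of how each one is computed for a single category question. It assumes two coders, nominal (unordered) categories, and no missing codes; the function names and sample data are hypothetical, and the platform's own implementation (for example, its handling of more coders, missing values, or how Composite Reliability aggregates categories) may differ.

```python
from collections import Counter

# Hypothetical sample: codes assigned by two coders to the same 10 units.
coder_a = ["pos", "pos", "neg", "neu", "neg", "pos", "neu", "neg", "pos", "neg"]
coder_b = ["pos", "neg", "neg", "neu", "neg", "pos", "pos", "neg", "pos", "neg"]


def observed_agreement(a, b):
    """Proportion of units on which both coders chose the same code."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def holsti(a, b):
    """Holsti's coefficient: 2M / (N1 + N2), M = number of agreements."""
    agreements = sum(x == y for x, y in zip(a, b))
    return 2 * agreements / (len(a) + len(b))


def cohens_kappa(a, b):
    """Cohen's kappa: chance agreement uses each coder's own marginal distribution."""
    n = len(a)
    count_a, count_b = Counter(a), Counter(b)
    p_e = sum(count_a[c] * count_b[c] for c in count_a) / n**2
    return (observed_agreement(a, b) - p_e) / (1 - p_e)


def scotts_pi(a, b):
    """Scott's pi: chance agreement uses the pooled distribution of both coders."""
    pooled = Counter(a) + Counter(b)
    total = len(a) + len(b)
    p_e = sum((v / total) ** 2 for v in pooled.values())
    return (observed_agreement(a, b) - p_e) / (1 - p_e)


def krippendorffs_alpha(a, b):
    """Nominal Krippendorff's alpha (two coders, no missing data), via coincidences."""
    n_values = len(a) + len(b)
    pooled = Counter(a) + Counter(b)
    # Each disagreeing unit adds the coincidences (c, k) and (k, c).
    observed = 2 * sum(x != y for x, y in zip(a, b))
    expected = (n_values**2 - sum(v * v for v in pooled.values())) / (n_values - 1)
    return 1 - observed / expected


for name, fn in [("Holsti", holsti), ("Cohen's kappa", cohens_kappa),
                 ("Scott's pi", scotts_pi), ("Krippendorff's alpha", krippendorffs_alpha)]:
    print(f"{name}: {fn(coder_a, coder_b):.3f}")
```

With this sample the script prints 0.800, 0.677, 0.675, and 0.691. Holsti's coefficient is the highest because it does not correct for chance agreement, while the other three do. Kappa, pi, and alpha are undefined (division by zero) when both coders use only one category.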

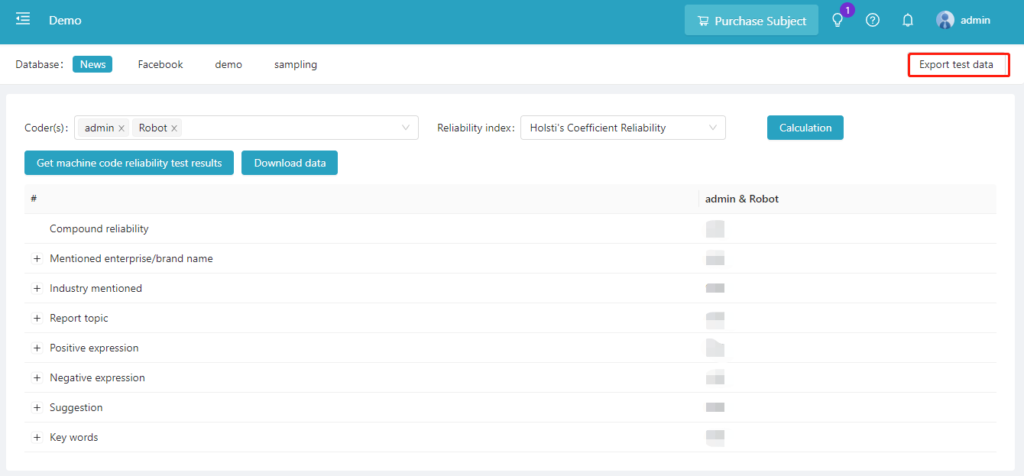

After coding, click “Export Testing Data” to download each coder's coding results.

To evaluate the accuracy of machine coding, click “Update machine code reliability test results” to compare the machine coding results with the manual coding results.
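As an illustration of what such a comparison involves, the sketch below treats the manual codes as the reference and reports the share of units where the machine agrees, plus which (manual, machine) category pairs are confused. This is an assumption about the kind of check involved, not the platform's method; the platform's test may instead report the reliability coefficients listed earlier. The function name and sample codes are hypothetical.

```python
from collections import Counter


def compare_machine_to_manual(machine, manual):
    """Treat manual codes as the reference; return accuracy and disagreement counts."""
    if len(machine) != len(manual):
        raise ValueError("machine and manual codes must cover the same units")
    matches = sum(m == h for m, h in zip(machine, manual))
    accuracy = matches / len(manual)
    # Count (manual code, machine code) pairs where the two disagree,
    # to show which categories the machine tends to confuse.
    confusions = Counter((h, m) for m, h in zip(machine, manual) if m != h)
    return accuracy, confusions


# Hypothetical codes for the same six units.
machine_codes = ["pos", "pos", "neg", "neu", "pos", "neg"]
manual_codes = ["pos", "neg", "neg", "neu", "neu", "neg"]

accuracy, confusions = compare_machine_to_manual(machine_codes, manual_codes)
print(f"accuracy = {accuracy:.2f}")
for (manual_code, machine_code), count in confusions.items():
    print(f"manual '{manual_code}' coded by machine as '{machine_code}': {count}")
```

Listing the confused pairs, not just the overall accuracy, points to the specific categories whose machine-coding rules need revision.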